(This is a marked-up, but otherwise exact, copy of the feature tracker item that you might know from sf.net.)

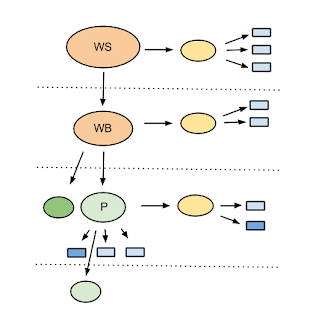

The Workbench Network

Being able to easily instantiate workbenches in a VM or the cloud would offer a lot of opportunities. The benefits range from having a clearly visible and understandable security barrier to having a fully laid out cloud workplace for newcomers to explore. A cloud workplace would also be useful in an educational setting or for collaboration in areas other than software development. Seeing the global resources as nodes that can be synced or read-only replicated to other systems supports this interesting perspective.

I'd like to paint a coarse picture of what a workbench network could look like. The tool possibilities this enables are vast, and I'll only scratch the surface of that topic.

But first, a name change. I will refer to what was previously known as Workbench Slaves as Workbench Bots. I've created a Wiki page (Glossary) for naming ideas and discussions to keep that out of the drafting process.

The Workshop Node

The global resources belong to a WorkShop Node (WSN). This is your personal work-hub, the nerve center for all your workbenches. The resources managed by the WSN could be roughly separated into three categories: Configuration & Data, Tools, and Active Parts.

Configuration & Data

This category is subject to syncing and/or replication. Configuration is a core responsibility of the workbench application, and what facilities will be provided can certainly fill a separate topic.

Tools

Global tool usage could simply be recorded and synced with other data. Instead of syncing the tool fleet on every node, it would be possible to browse the tools used on other nodes in a general tool installation dialog.
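To make the browsing idea a bit more concrete, here is a minimal sketch. It assumes tool usage is recorded as a plain set of tool names per node. The function name `tools_used_elsewhere` and the node and tool names are made up for illustration and are not part of any existing workbench API.

```python
def tools_used_elsewhere(usage_by_node, local_node):
    """Map each tool that is missing locally to the nodes that use it.

    usage_by_node: dict of node name -> set of tool names (hypothetical format
    for the synced usage records).
    """
    local_tools = usage_by_node.get(local_node, set())
    candidates = {}
    for node, tools in usage_by_node.items():
        if node == local_node:
            continue
        # Only tools the local node does not have are interesting to offer.
        for tool in tools - local_tools:
            candidates.setdefault(tool, []).append(node)
    # Sort for a stable listing in an installation dialog.
    return {tool: sorted(nodes) for tool, nodes in sorted(candidates.items())}


usage = {
    "laptop": {"git", "vim"},
    "desktop": {"git", "meld"},
    "server": {"meld", "tmux"},
}
offered = tools_used_elsewhere(usage, "laptop")
```

An installation dialog on the laptop could then list `offered` as installable candidates, without the tools ever being synced automatically.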

Active Parts

Although all of the WSN would reside in a single location, and would therefore be switchable, you will certainly want to use just one (no configuration duplication). The configuration for active parts (start/stop workbenches, notification handling, cron jobs, etc.) could be arranged in profiles that themselves could be started and stopped. This would also allow you to switch, for example, between private and work profiles.
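A rough sketch of how such profiles could be modelled follows. It assumes that switching means stopping the other running profiles before starting the requested one, which is only one possible policy. The names `ActiveProfile` and `switch_profile`, as well as the example workbenches and jobs, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ActiveProfile:
    """Hypothetical bundle of active parts that is started or stopped as a unit."""
    name: str
    workbenches: tuple[str, ...] = ()  # workbenches to start with the profile
    cron_jobs: tuple[str, ...] = ()    # scheduled jobs owned by the profile
    running: bool = False

    def start(self) -> None:
        self.running = True   # a real WSN would launch the parts here

    def stop(self) -> None:
        self.running = False  # and shut them down here


def switch_profile(profiles: dict[str, ActiveProfile], name: str) -> ActiveProfile:
    """Stop every other running profile, then start the requested one."""
    target = profiles[name]  # raises KeyError for an unknown profile
    for profile in profiles.values():
        if profile is not target and profile.running:
            profile.stop()
    target.start()
    return target


profiles = {
    "private": ActiveProfile("private", workbenches=("dotfiles",)),
    "work": ActiveProfile("work", workbenches=("backend",), cron_jobs=("nightly-sync",)),
}
switch_profile(profiles, "work")
switch_profile(profiles, "private")
```

After the two calls, only the private profile is marked as running. Allowing several profiles to run side by side would simply mean calling `start()` and `stop()` directly instead of `switch_profile`.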

Drones

The master record of your WSN could be somewhere online, which would allow you to fully sync the WSN to multiple devices without much hassle. In addition to the WSN, it would be possible to have parts of the WSN instantiated read-only on Drone nodes. These nodes can be on VMs or in the cloud and would offer the mentioned easy-to-understand security container where only notifications are routed back to your WSN. No automatic syncing of other resources would take place. Note that these nodes are usually accessible from a device where you have a fully capable WSN running, for example in another browser window or on the host of the VM. These drones are container nodes where you want to do actual work, but where the global resources are injected from your full WSN.

Drones could be managed with profiles that describe what tools to install initially, what parts of the configuration to push, and which notifications will be forwarded to the WSN. A special VM image could be kept up to date. When you want to explore a project that you cannot fully trust, the image could be cloned for that purpose.
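The three things a drone profile describes could be captured in a small record like the one below. This is only a sketch: the class `DroneProfile`, its field names, and the use of glob patterns for notification topics are assumptions made for illustration, not an existing format.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase


@dataclass
class DroneProfile:
    """Hypothetical description of what a Drone receives from the WSN."""
    name: str
    initial_tools: list[str] = field(default_factory=list)  # tools to install initially
    pushed_config: list[str] = field(default_factory=list)  # configuration parts to push
    forwarded_notifications: list[str] = field(default_factory=list)  # topic patterns routed back

    def forwards(self, topic: str) -> bool:
        """Return True if a notification topic is routed back to the WSN."""
        return any(fnmatchcase(topic, pattern) for pattern in self.forwarded_notifications)


# Example profile for exploring a project that cannot be fully trusted.
untrusted = DroneProfile(
    name="untrusted-exploration",
    initial_tools=["git", "vim"],
    pushed_config=["editor/keymap"],
    forwarded_notifications=["build.*"],
)
```

With this profile, `untrusted.forwards("build.failed")` is true while `untrusted.forwards("review.status")` is false, so nothing but build notifications would leave the drone.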

The WSN would know what drones and workbenches are available and could display status info about them.

Your tool fleet might include patched tools or extensions for tools managed with a workbench (e.g. a patched Vim or a workbench of Vim-Vundles (Vim extensions distributed through git)). To have those tools available on a Drone, it would be possible to push Workbench Bots there (remember: lightweight, raw workbenches that are completely independent and also used for automated tasks and deployment pipelines).

Cloud Workplaces

Projects could prepare a cloud workplace with web-based tools (or configuration for tool categories with a default tool selection). Users could explore the project without even having the workbench application installed locally. On the other hand, workbench application users could use the WSN to push their tool configuration to that cloud workplace, manage credentials, and receive notifications (e.g. a review status change could be routed through the cloud workbench back to your WSN).

The WSN is not a workbench

It has different and far fewer responsibilities. However, it would certainly be advantageous to have some facilities like the navigation parts and k-loc managers (VCS/sync control of known locations) of the workbench there too. So it probably is a special, stripped-down workbench. The default would be to not version-control any parts of the WSN; the online storage that is used to sync the WSN could do incremental backups, for example. Advanced users could gradually put k-locs under version control.

More on security

The WSN will probably manage credentials too. After all, you would want to be able to instantiate multiple workbenches of the same project. You would not want your whole workbench life to fall into the wrong hands. So maybe further means of compartmentalization can be incorporated, for example a credentials archive for projects the user is not currently actively involved in.

The devices the WSNs are on can provide additional means of security to shield against unauthorized access (locking, encryption). In addition to that, a mobile device could be a notification receiver without accessing the WSN.

How fine-grained the permissions/capabilities of Drones have to be remains to be seen. Viewing drones as accessible from a device with a full WSN and having notification receivers separate from that will cover a lot. The possibility of fine-grained permissions can be another guiding principle for structuring the layout of the WSN.

Conclusion

So, what I'm saying is that the global node can be organized as well. That again offers a lot of opportunities in the tool space.